The Mistake of Using 3rd Party Site Metrics for Backlink Qualification

Third-party domain metrics like domain authority, domain rating, and trust flow are unreliable for determining the quality of a backlink.

However, many SEOs still rely on these metrics, particularly Domain Authority, to decide the quality of external domains.

Why?

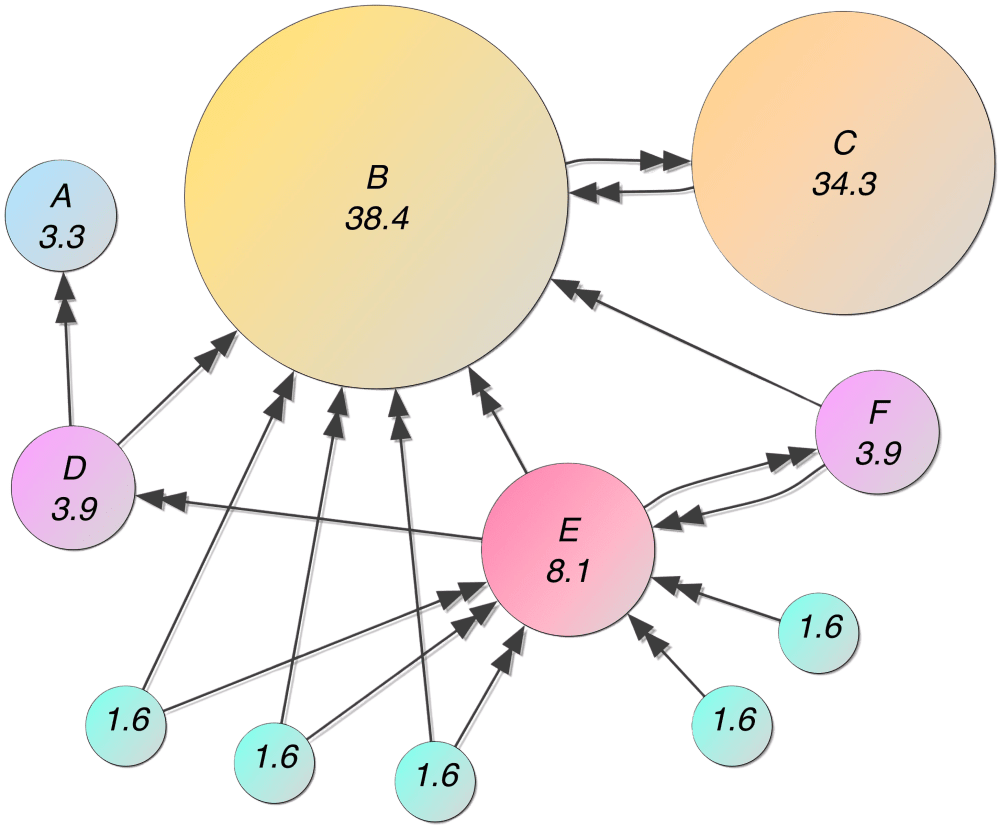

A site’s backlink graph can significantly influence its rankings within search results, yet third-party tools provide only limited insight into how Google actually weights links.

While metrics such as DA are commonly used to qualify backlink partners and guest post link opportunities, their importance is overstated and far too much trust is placed in their measurements.

Are SEO & Domain Metrics Trustworthy?

The short answer: no.

While third-party metrics do provide some indication of site quality, you cannot trust them to guide decision making. The scores indicate what should happen, not what will happen. Don’t rely on predictions that are based on a limited scope of data.

According to Moz, “Domain Authority is not a metric used by Google in determining search rankings and has no effect on the SERPs.”

Third-party SEO metrics are not equal to PageRank or other Google metrics. They are neither used by nor endorsed by Google.

There is no reason to believe they are a ranking factor or even something Google considers.

So why trust these metrics to advise us?

SEOs need a tangible measurement. Google used to publish a PageRank score that served as a visible measure of quality, but it has since stopped exposing PageRank externally, although PageRank is still used inside Google’s own algorithms.

With Google’s quality metric gone from public view, SEOs are looking to fill the void with a solid indicator of how a site is performing. Don’t fall into this problematic trap.

Why Relying on Domain Metrics is a Problem

Depending on these metrics can lead you astray when developing a backlinking strategy or evaluating domains for acquisition. There are massive inconsistencies between metrics and actual site quality. These inconsistencies distort how domain quality is judged, leading to both overestimations and underestimations.

You might be surprised to learn inaccuracies happen all the time.

But inaccuracies should be expected, which is why reliance is an issue. Google’s ranking algorithms have been changing continuously since the search engine launched in 1998, and RankBrain, its machine-learning system, was added in 2015. Many third-party metrics have been around for only a fraction of that time. How can they come close to a system that has been refined for more than two decades? The complexity of the algorithm cannot be matched. Here’s why:

Too Simplistic: Only Evaluate 20% of Relevant Factors

Fundamentally, there are issues with third party domain metrics and quality scores because they are not designed to analyze every single piece of data. While Google’s algorithm is designed to crawl the vast reaches of the web to gather and analyze all data, the algorithms used to quantify third party metrics are simplistic in comparison. They do not gather enough data for a complete, realistic picture. Each tool only has a piece of the puzzle.

If we want to give weight to these metrics, domains must be evaluated on the same scale that search engines use. According to Moz.com, Moz’s DA is “meant to approximate how competitive a given site is” on Google. However, the two are nowhere near an apples-to-apples comparison, because the evaluation process is different.

Currently, Moz combines over 40 website factors to calculate a site’s DA score. While this may seem like enough, Google evaluates over 200 ranking factors. By that count, Moz is evaluating only about 20% of the factors that Google considers relevant.

You cannot trust metrics that evaluate considerably fewer factors than real search engines do. These third-party tools are seeing only part of the picture. What if the missing pieces provide the greatest impact?

These tools cannot calculate at the level of complexity at which website authority and quality are actually determined. While DA 2.0 is live, it still offers a far more simplistic scope than that used by search engines. When tools don’t have every piece of information, they provide an inaccurate metric.

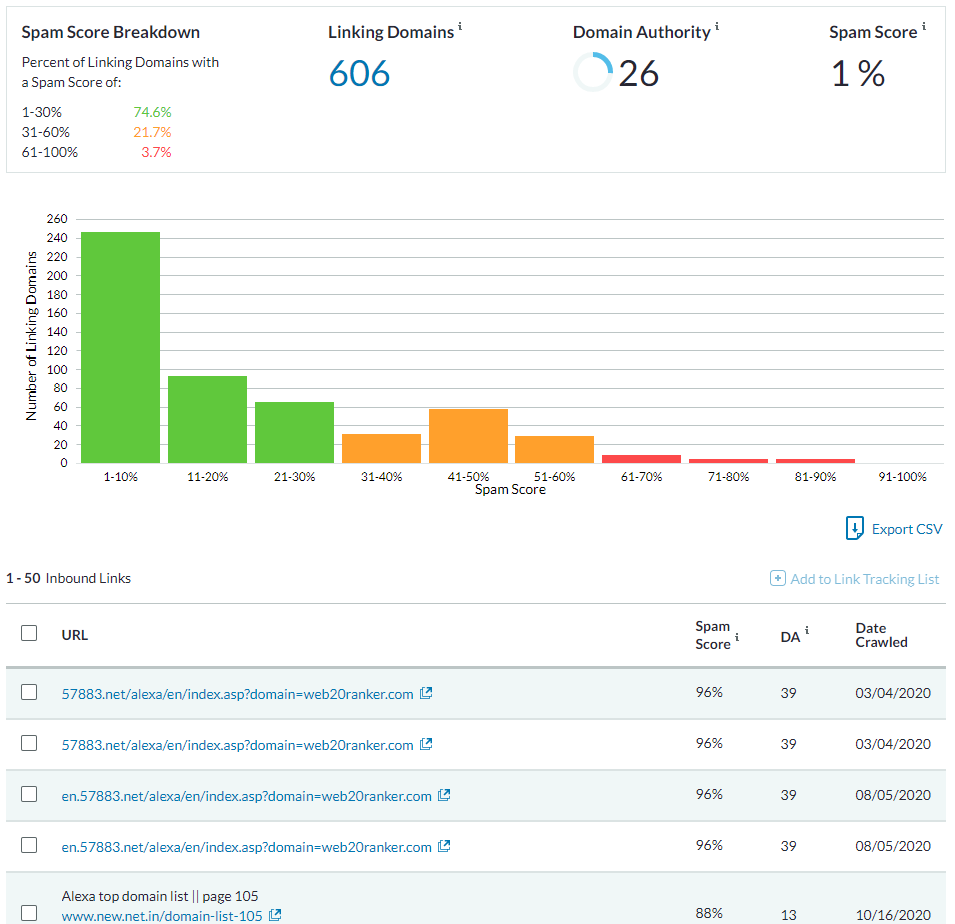

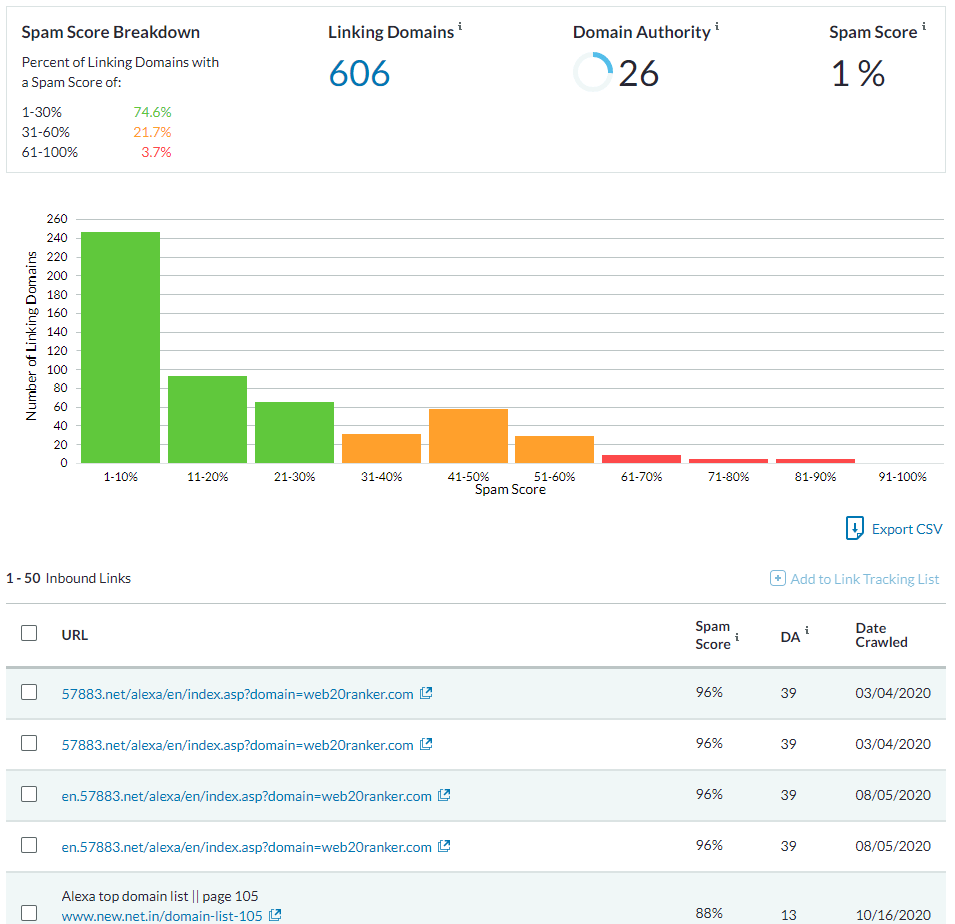

Missed Backlinks: Not Enough Resources

These third party tools don’t have the resources to crawl the web in its entirety. They are incapable of finding every single backlink. Instead, they base their metrics off backlink sample sets and estimations.

Third-party tools are not equipped to evaluate every backlink, so it’s common for backlinks to go uncounted when evaluating a domain’s merits. You cannot trust them to provide a complete picture.

If you compare a domain’s backlink count across different tools, chances are each count is different. There is no way to be sure which count is accurate. What if the missed backlinks are actually the highest quality links pointing to the site? This can greatly impact the judgement of site quality.
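One way to see this disagreement for yourself is to put the counts side by side. The sketch below is illustrative only: the tool labels and counts are made-up placeholders you would type in by hand from each tool, and `relative_spread` is a hypothetical helper, not part of any vendor’s API.

```python
from statistics import median


def relative_spread(counts):
    """Return (max - min) / median for the backlink counts reported by each tool."""
    values = list(counts.values())
    mid = median(values)
    if mid == 0:
        # Avoid dividing by zero when most tools report no links at all.
        return float("inf") if max(values) != min(values) else 0.0
    return (max(values) - min(values)) / mid


# Hypothetical backlink counts for one domain (placeholders, not real data).
reported = {"tool_a": 1200, "tool_b": 800, "tool_c": 1000}

spread = relative_spread(reported)
print(f"Counts differ by {spread:.0%} of the median")
```

A large spread does not tell you which tool is right. It only tells you that none of the counts should be treated as the true number.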

Metrics are Predictions

Despite what many SEOs think, third party metrics are not based on measurable performance in SERPs. Domain metrics are only predictions of how well a domain should perform on Google based on analyzed domain variables.

Predictions can be wrong or misleading. These forecasts are never guaranteed to be correct and can leave out important factors that may impact rankings and quality. More often than not, there are discrepancies between a domain’s predicted strength and its actual performance, because the score isn’t based on past results but on assumptions that fill in data gaps. Ahrefs itself says as much:

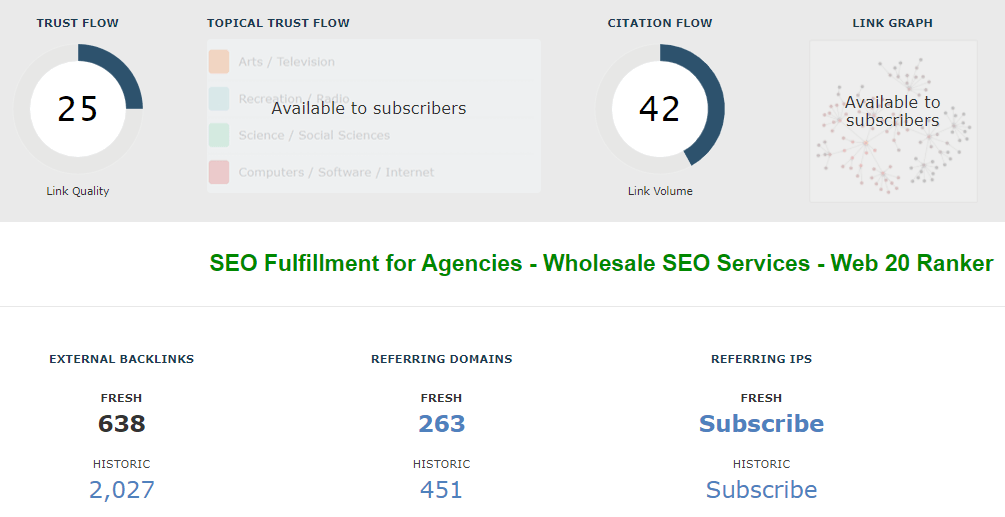

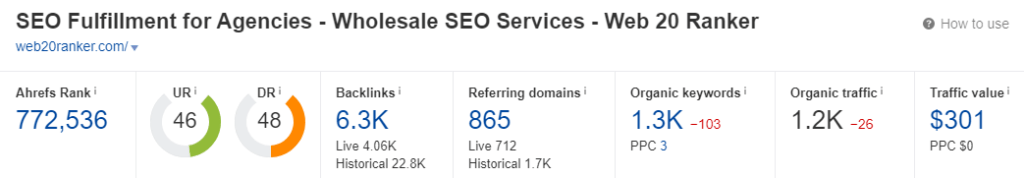

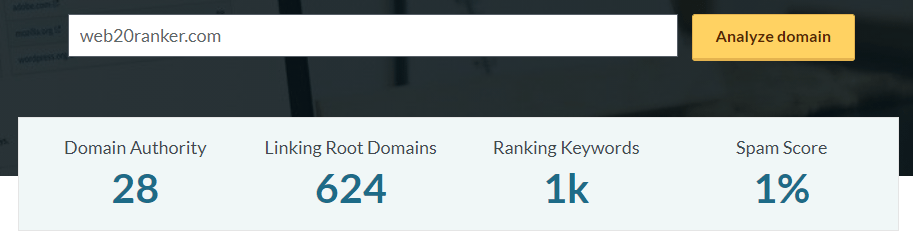

Inconsistencies Between Tools

Another important factor is the inconsistency between platforms and their respective domain tools. When you compare domain scores and backlink audits from different SEO tools like SEMRush, Ahrefs, Majestic SEO, and Moz, they hardly ever match up. A domain’s backlink count, referring domains, and overall domain score will differ between tools due to many factors, including:

- Different crawl timelines

- Utilizing different crawlers

- Measuring different metrics

- Different quality formulas & algorithms

Each platform utilizes a different crawler, so the results will vary. Would you trust metrics that give different scores for the same domain?

Metrics Can be Manipulated

If you’re considering purchasing a domain, there are tactics site owners can use to manipulate DA for higher prices. Those looking to attract backlinks can also manipulate the metrics.

Here’s a real life example. Julie Joyce of Link Fish Media, “grabbed a small list of sites in their Do Not Contact Database and checked to see what their DA was [after DA 2.0 was implemented]. [She] expected the DA to be very low on these sites as many of them are in the database because they are constantly spamming everyone with emails offering to sell links and almost all of them openly sell links on their sites. Surprisingly, about 75% of them still had a DA higher than 30.”

Moz’s updated DA tool didn’t recognize the low quality of these spammy sites. Their metrics made them look like high-quality domains when in reality they were not.

Google Warns Against Heavy Reliance on One Metric

Even Google found fault with focusing too much on a single metric.

Google has stated that a single score or metric does not take into account enough factors to accurately determine rankings. A combination of factors will always be used.

While PageRank was once the be-all and end-all metric for websites, that time has come and gone. Google began utilizing PageRank heavily in 2000, providing a quantifiable PageRank score. However, Google determined that relying on one metric didn’t accurately match quality content to search queries, so it removed the public score.

Even when the PageRank score was public, Google didn’t want to rely only on this one metric. It combined PageRank with other factors to get a better overall understanding. You should apply this same idea.

How to Avoid Overreliance on Metrics

Don’t Use One Metric: Use a Holistic Approach

It’s wise to take the metrics with a grain of salt. While Moz has updated their DA metric to increase trustworthiness, it’s still not enough. Many quality scores do not match the reality in search engines. You must employ a more well-rounded evaluation.

Utilize Manual Evaluation & Common Sense

Do a manual evaluation. There are many other indicators you can reference to avoid being led astray by third-party metrics. Look at a site’s linking strategy, content quality, and overall domain to determine its worth. With manual discovery, you may find a site that ranks high but has low metrics. Similarly, we see sites that have high scores but rank for few keywords.

Use common sense when judging the quality and authority of a domain. While the evaluation won’t be as detailed or perfect as Google’s, don’t forget what truly matters: relevance, engagement, and traffic.

Consider quickly looking through the site to see whether the backlink will be relevant and the content is high-quality. Does the domain rank for target keywords? Relevancy, rankings, and content quality are more impactful than a domain quality score.

Looking for Relevance is Key

While site quality is important, there are other factors that significantly impact the value of a link or domain. Relevance to your own site matters as much as quality, if not more. If you get a backlink from a high-DA site but it is completely irrelevant to your content, chances are it won’t impact rankings.

Use common sense to determine if a backlink is relevant to you:

- Does the link complement your content?

- Is the link out of place?

- Does it provide relevant information to your audience?

- Is there natural flow?
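If you review many prospects, it can help to record your answers to these questions in a consistent form. The following is a minimal sketch, assuming you answer each question by hand. The `RelevanceCheck` class, its field names, and the pass threshold of three out of four are all hypothetical choices made for illustration, not an established scoring method.

```python
from dataclasses import dataclass


@dataclass
class RelevanceCheck:
    """Manual answers to the four relevance questions for one prospective link."""
    complements_content: bool   # Does the link complement your content?
    fits_in_place: bool         # False if the link looks out of place
    useful_to_audience: bool    # Does it give your audience relevant information?
    natural_flow: bool          # Does the link read naturally in context?

    def passed(self):
        """Count how many of the four checks were answered favorably."""
        return sum([
            self.complements_content,
            self.fits_in_place,
            self.useful_to_audience,
            self.natural_flow,
        ])

    def is_relevant(self, minimum=3):
        """Treat the link as relevant if at least `minimum` checks pass (assumed cutoff)."""
        return self.passed() >= minimum


# Example: a link that fits the page well but adds little for the audience.
check = RelevanceCheck(True, True, False, True)
print(check.passed(), check.is_relevant())
```

The point is not the number itself but that the judgment comes from looking at the page, not from a third-party score.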

High-Quality Content Increases Audience

If your link is included in quality content, chances are your audience will grow. While the link juice passed between domains can give you a ranking boost, so can the direct ranking factors that will be impacted by increased traffic.

High-quality content can reduce bounce rate, increase time on page, and lead to more return visitors. All these combined can increase your rankings.

Check the Rankings for Domains

Misleading Metrics Lead to Ill-Advised Strategy

Don’t write off sites because of low DA, DR, or other quality metrics. Look at all scores to get an overall picture. While third-party metrics like DA and Trust Flow can be useful for getting a general idea of site quality, you should not focus on them heavily. Even if there are low scores across the board, the site may still be ranking well and attracting a meaningful amount of traffic.
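A simple cross-check makes this concrete: compare a domain’s third-party score with what you can observe about its rankings and traffic, and flag the cases where the two disagree. The sketch below assumes you have gathered the numbers yourself. The function name `flag_mismatch` and every threshold in it are hypothetical and should be tuned to your niche.

```python
def flag_mismatch(score, ranking_keywords, monthly_visits,
                  high_score=50, min_keywords=100, min_visits=1000):
    """Compare a third-party score with observed performance.

    All thresholds are illustrative assumptions, not industry standards.
    """
    # "Performs" here means the site both ranks for keywords and gets traffic.
    performs = ranking_keywords >= min_keywords and monthly_visits >= min_visits
    if score >= high_score and not performs:
        return "possibly overvalued"
    if score < high_score and performs:
        return "possibly undervalued"
    return "consistent"


# Low score, but the site ranks and gets traffic: do not write it off.
print(flag_mismatch(score=18, ranking_keywords=450, monthly_visits=12000))

# High score, but little visible performance: look closer before buying or pitching.
print(flag_mismatch(score=62, ranking_keywords=12, monthly_visits=150))
```

A flagged domain is a prompt for manual review, not a verdict.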

These SEO metrics can mislead you to overvalue or undervalue a website! Your link building can be negatively impacted if you put too much weight into any one metric – or all of them.

A website’s third-party quality metrics aren’t everything. They don’t give you a complete picture. Relying on them is like making a judgement with only part of the information, which can lead you down the wrong path.

Remember, Google has never divulged its 200+ ranking factors. No one outside Google knows exactly what sites are judged on. Google uses so many factors in its decision making because quality and authority can’t be boiled down to a single number.

This is why Web 2.0 Ranker cautions against relying on third party metrics. Even if you plan to continue utilizing these metrics, employ manual discovery and common sense when evaluating the merits of domains.
